Download the PHP package google-gemini-php/laravel without Composer

On this page you can find all versions of the php package google-gemini-php/laravel. It is possible to download/install these versions without Composer. Possible dependencies are resolved automatically.

Package: laravel

Short Description: Google Gemini PHP for Laravel is a supercharged PHP API client that allows you to interact with the Google Gemini AI API.

License: MIT

Information about the package laravel

Gemini PHP for Laravel is a community-maintained PHP API client that allows you to interact with the Gemini AI API.

- Fatih AYDIN github.com/aydinfatih

- Vytautas Smilingis github.com/Plytas

For more information, take a look at the google-gemini-php/client repository.

Table of Contents

- Prerequisites
- Setup
  - Installation
  - Setup your API key
  - Upgrade to 2.0
- Usage
  - Chat Resource
    - Text-only Input
    - Text-and-image Input
    - File Upload
    - Text-and-video Input
    - Multi-turn Conversations (Chat)
    - Stream Generate Content
    - Structured Output
    - Function calling
    - Count tokens
    - Configuration
  - Embedding Resource
  - Models
    - List Models
    - Get Model
- Testing

Prerequisites

To complete this quickstart, make sure that your development environment meets the following requirements:

- Requires PHP 8.1+

- Requires Laravel 9, 10, 11, or 12

Setup

Installation

First, install Gemini via the Composer package manager (composer require google-gemini-php/laravel).

Next, execute the package's install command.

This will create a config/gemini.php configuration file in your project, which you can modify to your needs using environment variables. A blank environment variable for the Gemini API key is also appended to your .env file.

You can also define further environment variables to adjust the settings in config/gemini.php.

Setup your API key

To use the Gemini API, you'll need an API key. If you don't already have one, create a key in Google AI Studio.
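The package's config/gemini.php reads its settings from environment variables. For illustration only, the plain-PHP sketch below shows the fail-fast pattern of checking for the key before any request is made. The helper resolveGeminiConfig is hypothetical, and the variable names (GEMINI_API_KEY, GEMINI_BASE_URL, GEMINI_REQUEST_TIMEOUT) and the 30-second default are assumptions; check the generated config file for the variables it actually reads.

```php
<?php

/**
 * Hypothetical helper: build client settings from an environment array and
 * fail fast when the API key is missing. Variable names are assumptions.
 */
function resolveGeminiConfig(array $env): array
{
    $apiKey = trim((string) ($env['GEMINI_API_KEY'] ?? ''));
    if ($apiKey === '') {
        throw new RuntimeException('GEMINI_API_KEY is empty; create a key in Google AI Studio.');
    }

    return [
        'api_key' => $apiKey,
        // null: let the client use its default endpoint
        'base_url' => $env['GEMINI_BASE_URL'] ?? null,
        // timeout in seconds; the default of 30 is an assumption
        'request_timeout' => (int) ($env['GEMINI_REQUEST_TIMEOUT'] ?? 30),
    ];
}

// In an application you would pass getenv() instead of a literal array.
$config = resolveGeminiConfig(['GEMINI_API_KEY' => 'test-key']);
```

Failing at boot with a clear message is usually easier to debug than an authentication error from the first API call.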

Upgrade to 2.0

Starting with the 2.0 release, this package works only with the Gemini v1beta API (see API versions).

To update, require the 2.0 release with Composer (composer require google-gemini-php/laravel:^2.0).

This release introduces support for new features:

- Structured output

- System instructions

- File uploads

- Function calling

- Code execution

- Grounding with Google Search

- Cached content

- Thinking model configuration

- Speech model configuration

The \Gemini\Enums\ModelType enum has been deprecated and will be removed in the next major version. Along with it, the Gemini::geminiPro() and Gemini::geminiFlash() methods have been removed.

We suggest using the Gemini::generativeModel() method and passing the model string directly. All methods that previously accepted the ModelType enum now accept any BackedEnum, so we recommend implementing your own enum for convenience.
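A minimal sketch of such an enum, assuming PHP 8.1 string-backed enums. The GeminiModel name, the model IDs it lists, and the fromName helper (which also accepts names with the REST-style "models/" prefix) are illustrative assumptions; check Google's documentation for the models currently available.

```php
<?php

/**
 * Project-specific model enum. Any string-backed enum works where the
 * package expects a BackedEnum; the IDs below are examples only.
 */
enum GeminiModel: string
{
    case Flash = 'gemini-2.0-flash';
    case FlashLite = 'gemini-2.0-flash-lite';
    case TextEmbedding = 'text-embedding-004';

    /** Map a raw name such as "models/gemini-2.0-flash" back to a case. */
    public static function fromName(string $name): self
    {
        $id = str_starts_with($name, 'models/') ? substr($name, strlen('models/')) : $name;

        return self::from($id); // throws ValueError for unknown IDs
    }
}

// Assumed call site: Gemini::generativeModel(GeminiModel::Flash)
$model = GeminiModel::fromName('models/gemini-2.0-flash');
```

Keeping model IDs in one enum means a model upgrade is a one-line change rather than a search across the codebase.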

There may be other breaking changes not listed here. If you encounter any issues, please submit an issue or a pull request.

Usage

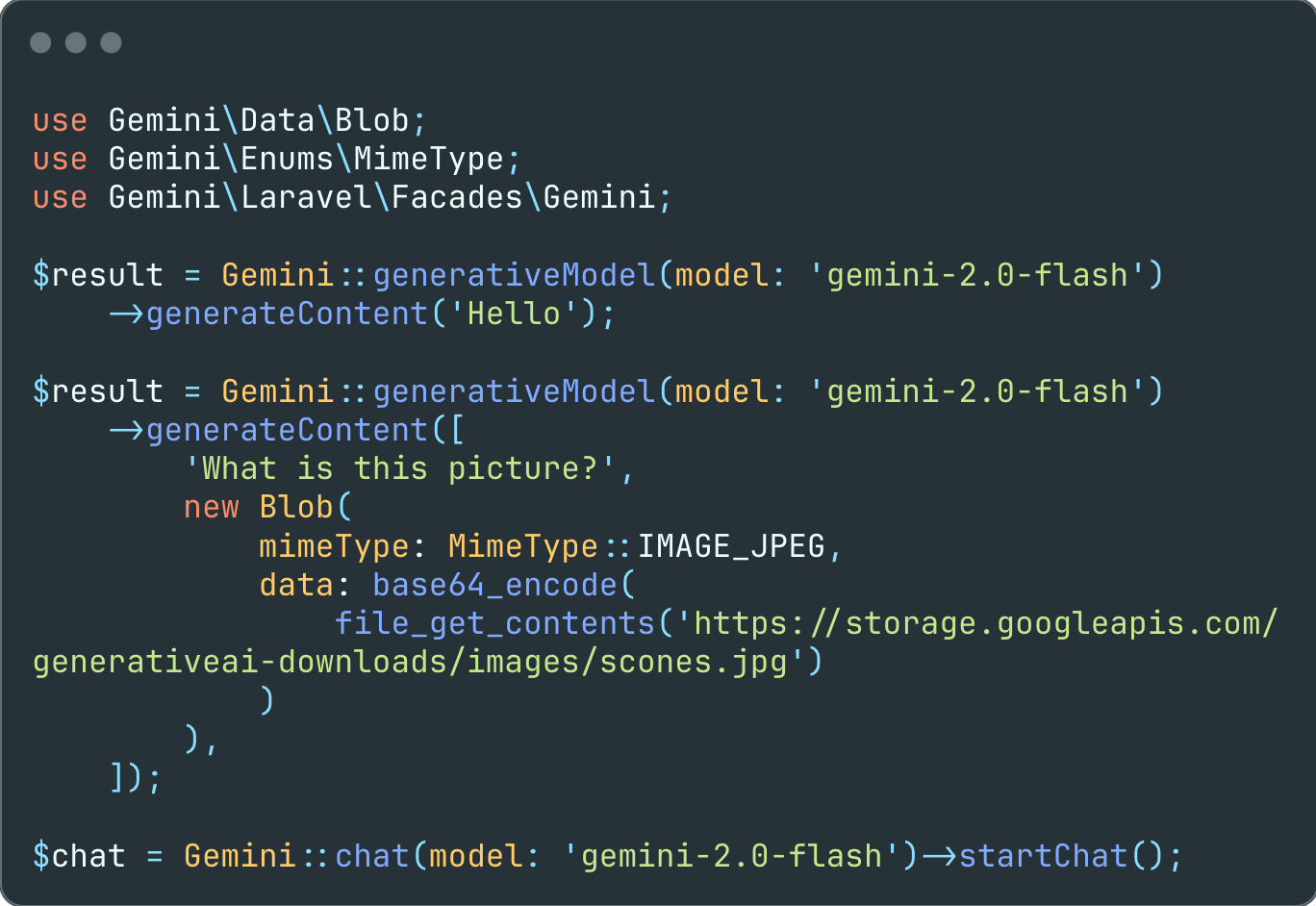

Interact with Gemini's API through the Gemini facade, for example via Gemini::generativeModel().

Chat Resource

For a complete list of supported input formats and methods in the Gemini API, see the models documentation.

Text-only Input

Generate a response from the model given an input message.
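The package builds the HTTP request and parses the reply for you. To show what travels over the wire, here is a sketch of the generateContent request and response shapes from the public Gemini REST API (contents, parts, candidates). The helpers textRequest and firstCandidateText are hypothetical and do not call the API; the reply is hand-written.

```php
<?php

/** Request body for a single text prompt: contents -> parts -> text. */
function textRequest(string $prompt): array
{
    return ['contents' => [['role' => 'user', 'parts' => [['text' => $prompt]]]]];
}

/** Concatenate the text parts of the first candidate in a decoded response. */
function firstCandidateText(array $response): string
{
    $parts = $response['candidates'][0]['content']['parts'] ?? [];
    $text = '';
    foreach ($parts as $part) {
        $text .= $part['text'] ?? '';
    }

    return $text;
}

$body = json_encode(textRequest('Hello'));

// Hand-written reply in the REST shape, standing in for a real response.
$reply = ['candidates' => [['content' => [
    'role' => 'model',
    'parts' => [['text' => 'Hi! '], ['text' => 'How can I help?']],
]]]];
$text = firstCandidateText($reply);
```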

Text-and-image Input

Generate responses by providing both text prompts and images to the Gemini model.
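Images can travel inline with the prompt as base64 data. A sketch of that request shape, following the REST API's inlineData part (mimeType plus base64 data); textAndImageRequest is a hypothetical helper, and the three bytes stand in for a real JPEG file.

```php
<?php

/** Build a text-and-image request body with the image sent inline as base64. */
function textAndImageRequest(string $prompt, string $imageBytes, string $mimeType = 'image/jpeg'): array
{
    return ['contents' => [[
        'parts' => [
            ['text' => $prompt],
            ['inlineData' => ['mimeType' => $mimeType, 'data' => base64_encode($imageBytes)]],
        ],
    ]]];
}

// In practice the bytes would come from file_get_contents('photo.jpg').
$request = textAndImageRequest('What is in this picture?', "\xFF\xD8\xFF");
```

Inline data suits small images; larger media is better uploaded first and referenced by URI.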

File Upload

To reference larger files and videos with various prompts, upload them to Gemini storage.
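Uploaded files, videos in particular, are processed before they can be used, so a common pattern is to poll the file's state. A sketch under the assumption that the state strings match the Files API (PROCESSING, ACTIVE, FAILED); waitForFile and the fetchState callable, which would wrap a metadata lookup in real code, are hypothetical.

```php
<?php

/** Poll until the file is ACTIVE, failing on FAILED or after too many attempts. */
function waitForFile(callable $fetchState, int $maxAttempts = 30, int $delaySeconds = 2): string
{
    for ($attempt = 1; $attempt <= $maxAttempts; $attempt++) {
        $state = $fetchState();
        if ($state === 'ACTIVE') {
            return $state; // ready to be referenced in prompts
        }
        if ($state === 'FAILED') {
            throw new RuntimeException('File processing failed.');
        }
        if ($delaySeconds > 0) {
            sleep($delaySeconds); // still processing: wait before asking again
        }
    }

    throw new RuntimeException("File not ready after {$maxAttempts} attempts.");
}

// Scripted states replace real API calls for this example.
$states = ['PROCESSING', 'PROCESSING', 'ACTIVE'];
$calls = 0;
$final = waitForFile(function () use (&$states, &$calls) {
    $calls++;
    return array_shift($states);
}, delaySeconds: 0);
```

The attempt cap keeps a stuck upload from blocking a worker indefinitely.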

Text-and-video Input

Process video content and get AI-generated descriptions using the Gemini API with an uploaded video file.
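Instead of sending video bytes inline, the request references the uploaded file by URI. A sketch of that part in the REST shape (fileData with mimeType and fileUri); textAndVideoRequest is hypothetical and the URI is a placeholder for the value returned by the upload.

```php
<?php

/** Build a request that points at an already-uploaded video by URI. */
function textAndVideoRequest(string $prompt, string $fileUri, string $mimeType = 'video/mp4'): array
{
    return ['contents' => [[
        'parts' => [
            ['text' => $prompt],
            ['fileData' => ['mimeType' => $mimeType, 'fileUri' => $fileUri]],
        ],
    ]]];
}

// Placeholder URI; use the one returned when the upload finished.
$request = textAndVideoRequest('Describe this video.', 'https://example.com/files/abc-123');
```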

Multi-turn Conversations (Chat)

Using Gemini, you can build freeform conversations across multiple turns.
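The generateContent call itself is stateless, so a conversation is carried by resending the earlier turns, each tagged with a role of user or model. A minimal sketch of that bookkeeping; ChatSession is a hypothetical class, not the package's chat object, and the $send closure stands in for the real API call.

```php
<?php

final class ChatSession
{
    /** @var list<array{role: string, parts: list<array{text: string}>}> */
    private array $history = [];

    /** $send receives the full contents list and returns the reply text. */
    public function __construct(private Closure $send) {}

    public function sendMessage(string $text): string
    {
        $this->history[] = ['role' => 'user', 'parts' => [['text' => $text]]];
        $reply = ($this->send)($this->history);
        // Store the model's turn so the next request includes it.
        $this->history[] = ['role' => 'model', 'parts' => [['text' => $reply]]];

        return $reply;
    }

    public function history(): array
    {
        return $this->history;
    }
}

// A fake model that reports how many turns it was sent.
$chat = new ChatSession(fn (array $contents): string => 'turns seen: ' . count($contents));
$first = $chat->sendMessage('Hello');
$second = $chat->sendMessage('Tell me more');
```

Because the whole history is resent, long chats grow in token cost with every turn.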

Stream Generate Content

By default, the model returns a response only after the entire generation process has completed. You can achieve faster interactions by not waiting for the entire result and instead using streaming to handle partial results.
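The consumer side of streaming can be sketched with a PHP generator: each partial chunk is handled as soon as it is yielded, while a buffer accumulates the full text. simulatedStream and consumeStream are hypothetical; in real code the iterable would be the stream of partial responses from the API.

```php
<?php

/** Simulate a stream by yielding fixed-size chunks of a finished text. */
function simulatedStream(string $fullText, int $chunkSize): Generator
{
    foreach (str_split($fullText, $chunkSize) as $chunk) {
        yield $chunk;
    }
}

/** Handle each chunk as it arrives and return the accumulated text. */
function consumeStream(iterable $stream, callable $onChunk): string
{
    $buffer = '';
    foreach ($stream as $chunk) {
        $onChunk($chunk); // e.g. flush to the browser or a websocket
        $buffer .= $chunk;
    }

    return $buffer;
}

$seen = [];
$full = consumeStream(simulatedStream('Once upon a time', 5), function (string $chunk) use (&$seen) {
    $seen[] = $chunk;
});
```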

Structured Output

Gemini generates unstructured text by default, but some applications require structured text. For these use cases, you can constrain Gemini to respond with JSON, a structured data format suitable for automated processing. You can also constrain the model to respond with one of the options specified in an enum.
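A sketch of the two halves of structured output, assuming the REST field names responseMimeType and responseSchema: the generation config that requests a JSON array of recipe objects, and a defensive decode that checks each item for its required keys. The recipe schema and both helper names are illustrative.

```php
<?php

/** Generation config asking for a JSON array of recipe objects. */
function recipeGenerationConfig(): array
{
    return [
        'responseMimeType' => 'application/json',
        'responseSchema' => [
            'type' => 'ARRAY',
            'items' => [
                'type' => 'OBJECT',
                'properties' => [
                    'recipe_name' => ['type' => 'STRING'],
                    'cooking_time_in_minutes' => ['type' => 'INTEGER'],
                ],
                'required' => ['recipe_name', 'cooking_time_in_minutes'],
            ],
        ],
    ];
}

/** Decode the model's JSON text and check every item has the required keys. */
function decodeRecipes(string $json, array $schema): array
{
    $items = json_decode($json, true, flags: JSON_THROW_ON_ERROR);
    if (!is_array($items) || !array_is_list($items)) {
        throw new UnexpectedValueException('Expected a JSON array.');
    }
    foreach ($items as $i => $item) {
        foreach ($schema['items']['required'] as $key) {
            if (!is_array($item) || !array_key_exists($key, $item)) {
                throw new UnexpectedValueException("Item {$i} is missing '{$key}'.");
            }
        }
    }

    return $items;
}

$config = recipeGenerationConfig();
$recipes = decodeRecipes('[{"recipe_name":"Shortbread","cooking_time_in_minutes":20}]', $config['responseSchema']);
```

Validating on your side is cheap insurance even when the schema constrains the model.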

Function calling

Gemini provides the ability to define and utilize custom functions that the model can call during conversations. This enables the model to perform specific actions or calculations through your defined functions.
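A sketch of the dispatch step, assuming the REST part shapes functionCall ({name, args}) and functionResponse ({name, response}): look up the requested function in a registry, run it, and wrap the result for the next request. handleFunctionCall and the get_weather tool are hypothetical.

```php
<?php

/** Run the function the model asked for and wrap its result as a functionResponse part. */
function handleFunctionCall(array $part, array $registry): array
{
    $call = $part['functionCall'] ?? null;
    if ($call === null) {
        throw new InvalidArgumentException('Part does not contain a functionCall.');
    }
    $name = $call['name'];
    if (!isset($registry[$name])) {
        throw new InvalidArgumentException("Unknown function '{$name}'.");
    }
    $result = $registry[$name]($call['args'] ?? []);

    // Sent back to the model in the next request so it can finish its answer.
    return ['functionResponse' => ['name' => $name, 'response' => $result]];
}

$registry = [
    'get_weather' => fn (array $args): array => ['city' => $args['city'], 'forecast' => 'sunny'],
];
$reply = handleFunctionCall(
    ['functionCall' => ['name' => 'get_weather', 'args' => ['city' => 'Vilnius']]],
    $registry,
);
```

Rejecting unknown names keeps the model from invoking anything you did not explicitly register.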

Count tokens

When using long prompts, it might be useful to count tokens before sending any content to the model.
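One way to use a token count is as a pre-flight check against the model's input limit. The sketch assumes a decoded countTokens reply of the form {"totalTokens": N}; fitsInputLimit, the reserve margin, and the example limits are hypothetical.

```php
<?php

/** True when the counted prompt plus a safety reserve fits the input limit. */
function fitsInputLimit(array $countResponse, int $inputTokenLimit, int $reserve = 0): bool
{
    $total = $countResponse['totalTokens'] ?? null;
    if (!is_int($total)) {
        throw new UnexpectedValueException('countTokens response has no totalTokens.');
    }

    return $total + $reserve <= $inputTokenLimit;
}

$ok = fitsInputLimit(['totalTokens' => 9], inputTokenLimit: 32_768);
$tooBig = fitsInputLimit(['totalTokens' => 1_000], inputTokenLimit: 1_000, reserve: 1);
```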

Configuration

Every prompt you send to the model includes parameter values that control how the model generates a response. The model can generate different results for different parameter values. Learn more about model parameters.

You can also use safety settings to adjust the likelihood of getting responses that may be considered harmful. By default, safety settings block content that has a medium or high probability of being unsafe across all dimensions. Learn more about safety settings.
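A sketch of per-request settings in the REST shape: generationConfig for sampling parameters and safetySettings as category/threshold pairs. buildRequestOptions is hypothetical; the field names and threshold constants follow the public API (the list below is a subset), but the chosen values are only examples.

```php
<?php

// Subset of the API's blocking thresholds accepted by this sketch.
const SAFETY_THRESHOLDS = ['BLOCK_NONE', 'BLOCK_ONLY_HIGH', 'BLOCK_MEDIUM_AND_ABOVE', 'BLOCK_LOW_AND_ABOVE'];

/** Hypothetical builder for generationConfig plus safetySettings. */
function buildRequestOptions(float $temperature, int $maxOutputTokens, string $harassmentThreshold): array
{
    if (!in_array($harassmentThreshold, SAFETY_THRESHOLDS, true)) {
        throw new InvalidArgumentException("Unknown threshold '{$harassmentThreshold}'.");
    }

    return [
        'generationConfig' => [
            'temperature' => $temperature,         // lower values give more deterministic output
            'maxOutputTokens' => $maxOutputTokens, // upper bound on response length
            'stopSequences' => ['END'],            // generation stops at this marker
        ],
        'safetySettings' => [
            ['category' => 'HARM_CATEGORY_HARASSMENT', 'threshold' => $harassmentThreshold],
            ['category' => 'HARM_CATEGORY_HATE_SPEECH', 'threshold' => 'BLOCK_MEDIUM_AND_ABOVE'],
        ],
    ];
}

// Relax harassment blocking to high-probability content only.
$options = buildRequestOptions(0.7, 800, 'BLOCK_ONLY_HIGH');
```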

Embedding Resource

Embedding is a technique used to represent information as a list of floating point numbers in an array. With Gemini, you can represent text (words, sentences, and blocks of text) in a vectorized form, making it easier to compare and contrast embeddings. For example, two texts that share a similar subject matter or sentiment should have similar embeddings, which can be identified through mathematical comparison techniques such as cosine similarity.
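Cosine similarity is the dot product of two vectors divided by the product of their lengths, giving 1 for vectors pointing the same way and 0 for orthogonal ones. A plain-PHP sketch; the toy vectors stand in for real embedding values, which typically have hundreds of dimensions.

```php
<?php

/** Cosine similarity: dot(a, b) / (|a| * |b|). */
function cosineSimilarity(array $a, array $b): float
{
    $a = array_values($a);
    $b = array_values($b);
    if ($a === [] || count($a) !== count($b)) {
        throw new InvalidArgumentException('Vectors must be non-empty and the same length.');
    }

    $dot = $normA = $normB = 0.0;
    foreach ($a as $i => $x) {
        $dot += $x * $b[$i];
        $normA += $x * $x;
        $normB += $b[$i] * $b[$i];
    }
    if ($normA == 0.0 || $normB == 0.0) {
        throw new InvalidArgumentException('A zero vector has no direction.');
    }

    return $dot / (sqrt($normA) * sqrt($normB));
}

// Toy vectors: the first pair points the same way, the second is orthogonal.
$parallel = cosineSimilarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]);
$orthogonal = cosineSimilarity([1.0, 0.0], [0.0, 1.0]);
```

Because the measure ignores vector length, two texts are compared by direction only, which is why scaling a vector leaves its similarity unchanged.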

Use the text-embedding-004 model with either embedContents or batchEmbedContents.

Models

We recommend checking Google documentation for the latest supported models.

List Models

Use the list models call to see the available Gemini models.
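The list call is paginated: each page carries a nextPageToken until the last one. A sketch that collects every page, assuming REST-style pages with models and nextPageToken keys; listAllModels and the fake page source are hypothetical.

```php
<?php

/** Follow nextPageToken until it is absent or empty, collecting all models. */
function listAllModels(callable $fetchPage, int $pageSize = 50): array
{
    $models = [];
    $token = null;
    do {
        $page = $fetchPage($pageSize, $token);
        foreach ($page['models'] ?? [] as $model) {
            $models[] = $model;
        }
        $token = $page['nextPageToken'] ?? null;
    } while ($token !== null && $token !== '');

    return $models;
}

// Two fake pages: the first points to the second, which ends the listing.
$fake = function (int $size, ?string $token): array {
    return $token === null
        ? ['models' => [['name' => 'models/a'], ['name' => 'models/b']], 'nextPageToken' => 'p2']
        : ['models' => [['name' => 'models/c']]];
};
$all = listAllModels($fake, pageSize: 2);
```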

Get Model

Get information about a model, such as version, display name, input token limit, etc.
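A small sketch that reads the fields mentioned above from a decoded model resource, assuming the REST field names name, version, displayName, inputTokenLimit and outputTokenLimit; summarizeModel and the sample data are made up.

```php
<?php

/** One-line summary of a decoded model resource; missing fields get placeholders. */
function summarizeModel(array $model): string
{
    return sprintf(
        '%s (%s, version %s): %d input / %d output tokens',
        $model['displayName'] ?? 'unknown',
        $model['name'] ?? '?',
        $model['version'] ?? '?',
        $model['inputTokenLimit'] ?? 0,
        $model['outputTokenLimit'] ?? 0,
    );
}

$summary = summarizeModel([
    'name' => 'models/example-model',
    'version' => '001',
    'displayName' => 'Example Model',
    'inputTokenLimit' => 1000,
    'outputTokenLimit' => 200,
]);
```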

Testing

The package provides a fake implementation of the Gemini\Client class that allows you to fake the API responses.

To test your code, swap the Gemini\Client class with the Gemini\Testing\ClientFake class in your test case.

The fake responses are returned in the order they are provided when creating the fake client.

All responses have a fake() method that lets you create a response object by providing only the parameters relevant to your test case.

For a streamed response, you can optionally provide a resource holding the fake response data.

After the requests have been sent, several assertion methods are available to verify that the expected requests were sent.

To write tests that expect the API request to fail, provide a Throwable object as the response.
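To make the described behavior concrete, here is a self-contained test double that returns queued responses in order, throws any queued Throwable, and records requests for assertions. QueuedFakeClient is hypothetical and is not the package's ClientFake; use the package's fake in real tests.

```php
<?php

final class QueuedFakeClient
{
    /** @var list<array{method: string, params: array}> */
    public array $sent = [];

    public function __construct(private array $responses) {}

    public function request(string $method, array $params = []): mixed
    {
        $this->sent[] = ['method' => $method, 'params' => $params];
        if ($this->responses === []) {
            throw new LogicException('No fake responses left.');
        }
        $next = array_shift($this->responses); // responses come back in queue order
        if ($next instanceof Throwable) {
            throw $next; // simulate a failing API call
        }

        return $next;
    }

    public function assertSentCount(int $expected): void
    {
        if (count($this->sent) !== $expected) {
            throw new LogicException(sprintf('Expected %d requests, got %d.', $expected, count($this->sent)));
        }
    }
}

$fake = new QueuedFakeClient(['first', new RuntimeException('quota exceeded')]);
$first = $fake->request('generateContent', ['prompt' => 'hi']);
```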

All versions of laravel with dependencies

google-gemini-php/client Version ^2.0

laravel/framework Version ^9.0|^10.0|^11.0|^12.0